Zero out the start of one of the image files:

$ dd if=/dev/zero of=/scratch/2.img bs=4M count=1 2>/dev/null

Since we used a blocksize (bs) of 4M and a count of 1, the once 2G image file is now a mere 4M.
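The shrinking effect can be reproduced safely outside of any zpool. The following is a minimal sketch, assuming bash and GNU coreutils (`truncate`, `stat -c`); it uses a throwaway temporary directory instead of /scratch, and the variable names are illustrative. The file ends up at 4M because dd truncates its output file unless `conv=notrunc` is given.

```shell
#!/usr/bin/env bash
# Sketch: dd without conv=notrunc shrinks a 2G sparse file to bs * count.
# The temporary directory stands in for /scratch (an assumption, for safety).
set -euo pipefail

workdir=$(mktemp -d)
img="$workdir/2.img"

# Create a 2G sparse file, like the image files used for the zpool.
truncate -s 2G "$img"
size_before=$(stat -c %s "$img")

# Same dd invocation as in the text, pointed at the throwaway file.
dd if=/dev/zero of="$img" bs=4M count=1 2>/dev/null
size_after=$(stat -c %s "$img")

echo "before: $size_before bytes"
echo "after:  $size_after bytes"
```

Remove the temporary directory afterwards; nothing in this sketch touches a real pool.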

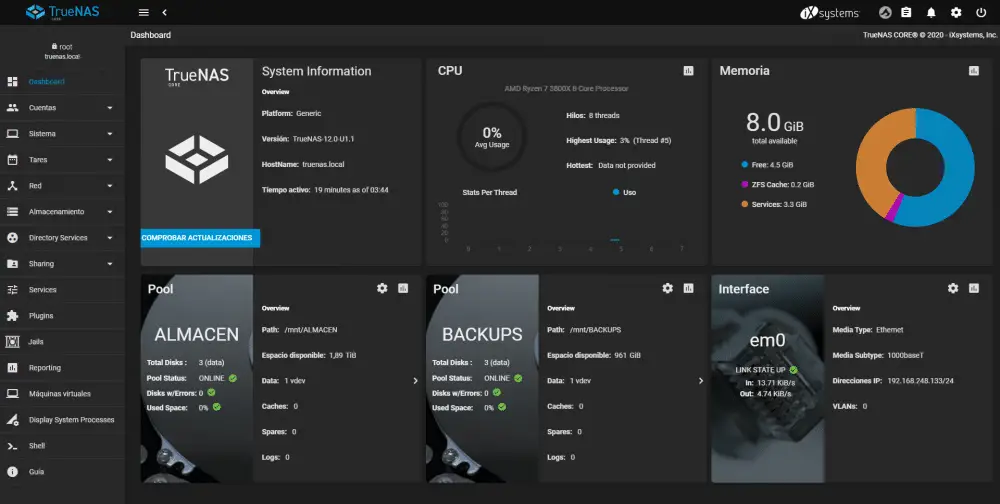

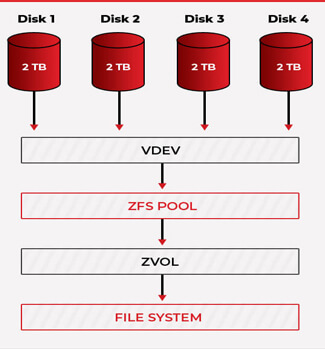

A pool status report includes the result of the last scrub, for example:

scan: scrub repaired 0 in 4h22m with 0 errors on Fri Aug 28 23:52:55 2015

An example creating child datasets and using compression:

# zfs create -p -o compression=on san/vault/falcon/snapshots
# zfs create -o compression=on san/vault/falcon/version
# zfs create -p -o compression=on san/vault/redtail/c/Users

The datasets can now be listed with zfs list (this was a linear span).

Note: there is a huge advantage (file deletion) to making a three-level dataset. If you have large amounts of data, separating it into datasets makes it easier to destroy a dataset than to wait for a recursive file removal to complete.

Users who did not specify properties in the creation step can set properties of their zpools at any time after creation using /usr/bin/zfs. The current properties of a given zpool can be displayed, and the recording of access time (atime) can be disabled.

Verify that the property has been set on the zpool:

# zfs get atime
NAME PROPERTY VALUE SOURCE

Tip: This option, like many others, can also be turned off when creating the zpool by appending the following to the creation step: -O atime=off

Add content to the Zpool and query compression performance

Fill the zpool with files. For this example, first enable compression. ZFS supports several compression algorithms, including lzjb, gzip, gzip-N, zle, and lz4. A setting of simply 'on' selects the default algorithm (lzjb, or lz4 on newer OpenZFS releases where the lz4_compress feature is enabled), but lz4 is a nice alternative.

In this example, the linux source tarball is copied over, and since lz4 compression has been enabled on the zpool, the corresponding compression ratio can be queried as well:

# zfs get compressratio
NAME PROPERTY VALUE SOURCE

Simulate a disk failure and rebuild the Zpool

To simulate catastrophic disk failure (i.e. one of the HDDs in the zpool stops functioning), zero out one of the VDEVs.
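The property and compression steps described above can be collected into one small script. This is only a sketch under stated assumptions: the pool is named zpool as in the RAIDZ1 example, the helper names `run` and `tune_pool` are hypothetical, and passing the pool name to `zfs get` is my addition to narrow the output. By default it only prints the commands, since the real ones need root and an existing pool.

```shell
#!/usr/bin/env bash
# Sketch: property and compression steps for a pool, in dry-run mode by default.
# POOL and DRY_RUN are illustrative knobs, not part of ZFS itself.
DRY_RUN=${DRY_RUN:-1}
POOL=${POOL:-zpool}

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
    if [ "$DRY_RUN" = 1 ]; then
        printf '# %s\n' "$*"
    else
        "$@"
    fi
}

tune_pool() {
    run zfs get all "$POOL"              # show all current properties
    run zfs set atime=off "$POOL"        # stop recording access times
    run zfs get atime "$POOL"            # verify the property
    run zfs set compression=lz4 "$POOL"  # enable lz4 compression
    run zfs get compressratio "$POOL"    # query the achieved ratio
}

tune_pool
```

Running it with `DRY_RUN=0` as root would execute the commands against the named pool.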

# zpool create san /dev/sdd /dev/sde /dev/sdf


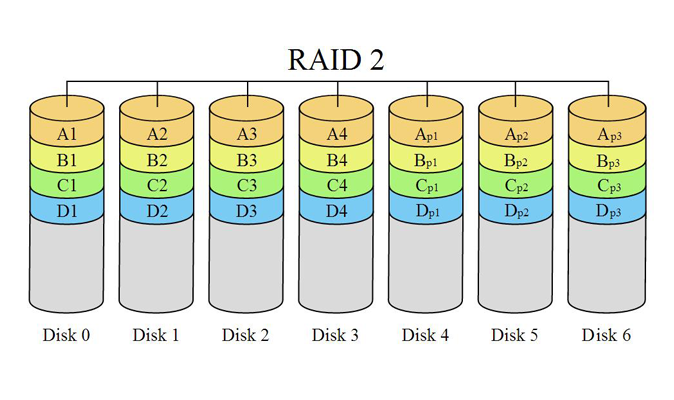

This setup is for a JBOD, normally good for three or fewer drives, where space is still a concern and you are not ready to move to the full features of ZFS because of it. RaidZ will be your better bet once you have enough space, since this setup does NOT take advantage of the full features of ZFS; it does, however, give you a starting array that will suffice for years until you build up your hard drive collection.

Higher-level RAIDZ pools can be assembled in a like fashion by adjusting the for statement to create the image files, by specifying "raidz2" or "raidz3" in the creation step, and by appending the additional image files to the creation step.
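To make the "specify the level and append the additional image files" step concrete, here is a small bash sketch that only prints the creation command rather than running it. The function name `build_raidz_cmd` is hypothetical, the pool name zpool and the /scratch path follow the RAIDZ1 example, and the disk counts of 4 and 5 are illustrative choices, not recommendations from the text.

```shell
#!/usr/bin/env bash
# Sketch: print (not run) a zpool creation line for a given RAIDZ level.
# Usage: build_raidz_cmd LEVEL COUNT [DIR]
build_raidz_cmd() {
    local level=$1 count=$2 dir=${3:-/scratch}
    local cmd="zpool create zpool $level"
    local i
    # Append one image file per virtual disk.
    for ((i = 1; i <= count; i++)); do
        cmd+=" $dir/$i.img"
    done
    printf '%s\n' "$cmd"
}

build_raidz_cmd raidz2 4
build_raidz_cmd raidz3 5
```

The printed line can be reviewed and then run as root once the matching image files exist.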

The "power of two plus parity" layout is for storage space efficiency and hitting the "sweet spot" in performance. This example uses the simplest such set, (2+1).

Create three 2G files to serve as virtual hard drives:

$ for i in {1..3}; do truncate -s 2G /scratch/$i.img; done

Create the RAIDZ1 zpool from them:

# zpool create zpool raidz1 /scratch/1.img /scratch/2.img /scratch/3.img

Notice that a 3.91G zpool has been created and mounted for us:

# zfs list
NAME USED AVAIL REFER MOUNTPOINT
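The image-file step can be tried on its own before involving ZFS. The sketch below assumes bash and GNU coreutils and writes to a temporary directory rather than /scratch; the variable names are illustrative. It creates the three files and reports their combined apparent size, which is 6144 MiB even though sparse files normally occupy almost no disk space until written to.

```shell
#!/usr/bin/env bash
# Sketch: create three 2G sparse image files and report their apparent size.
# The temporary directory stands in for /scratch (an assumption, for safety).
set -euo pipefail

workdir=$(mktemp -d)

for i in 1 2 3; do
    truncate -s 2G "$workdir/$i.img"
done

imgs=("$workdir"/*.img)
count=${#imgs[@]}

# Apparent size in MiB, summed over all image files (last line is the total).
apparent=$(du --apparent-size -cm "${imgs[@]}" | tail -n1 | cut -f1)

echo "created $count image files, apparent total: ${apparent} MiB"
```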


The requisite packages are available in the AUR and in an unofficial repo. Details are provided in the ZFS#Installation article.

Management of ZFS is pretty simple, with only two utilities needed: zpool and zfs.

For zpools with just two drives and redundancy, it is recommended to use ZFS in mirror mode, which functions like RAID1 by mirroring the data. Mirroring can also be used as an alternative to RAIDZ setups, with surprising results.

The minimum number of drives for a RAIDZ1 is three. It is best to follow the "power of two plus parity" recommendation.
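The "power of two plus parity" recommendation comes down to simple arithmetic: subtract the parity disks implied by the RAIDZ level from the total and check whether the remainder is a power of two. The following bash sketch illustrates that check; the function names `parity_for` and `check_layout` are hypothetical, and it says nothing about actual performance.

```shell
#!/usr/bin/env bash
# Sketch: check a disk count against the "power of two plus parity" rule.

# Number of parity disks implied by the RAIDZ level.
parity_for() {
    case $1 in
        raidz1) echo 1 ;;
        raidz2) echo 2 ;;
        raidz3) echo 3 ;;
        *) echo "unknown level: $1" >&2; return 1 ;;
    esac
}

# Usage: check_layout LEVEL TOTAL_DISKS
check_layout() {
    local level=$1 total=$2 parity data
    parity=$(parity_for "$level") || return 1
    data=$(( total - parity ))
    # A positive integer is a power of two when it has exactly one bit set.
    if (( data > 0 && (data & (data - 1)) == 0 )); then
        echo "$level with $total disks: $data data + $parity parity (power of two)"
    else
        echo "$level with $total disks: $data data + $parity parity (not a power of two)"
    fi
}

check_layout raidz1 3
check_layout raidz1 4
check_layout raidz2 6
```

The three-disk RAIDZ1 case corresponds to the (2+1) set: two data disks plus one parity disk.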
